Showing archive for: “Innovation & Entrepreneurship”

Should the Federal Government Regulate Artificial Intelligence?

Artificial intelligence is in the public-policy spotlight. In October 2023, the Biden administration issued its Presidential Executive Order on AI, which directed federal agencies to cooperate in protecting the public from potential AI-related harms. President Joe Biden said in his March 2024 State of the Union Address that government enforcers will crack down on the ...

Chris DeMuth Jr: Perspectives on Antitrust from Financial Markets and Venture Capital

How much do you take potential antitrust concerns into account when evaluating investments or mergers and acquisitions? Has this changed over time? Antitrust is a big part of M&A and the work I do in analyzing deals at Rangeley Capital. It has always been important, but the importance has grown with this administration’s activist approach. ...

Section 214: Title II’s Trojan Horse

The Federal Communications Commission (FCC) has proposed classifying broadband internet-access service as a common carrier “telecommunications service” under Title II of the Communications Act. One major consequence of this reclassification would be subjecting broadband providers to Section 214 regulations that govern the provision, acquisition, and discontinuation of communication “lines.” In the Trojan War, the Greeks ...

Biden’s AI Executive Order Sees Dangers Around Every Virtual Corner

Here in New Jersey, where I live, the day before Halloween is commonly celebrated as “Mischief Night,” an evening of adolescent revelry and light vandalism that typically includes hurling copious quantities of eggs and toilet paper. It is perhaps fitting, therefore, that President Joe Biden chose Oct. 30 to sign a sweeping executive order (EO) ...

Dynamic Competition Proves There Is No Captive Audience: 10 Years, 10G, and YouTube TV

In Susan Crawford’s 2013 book “Captive Audience: The Telecom Industry and Monopoly Power in the New Gilded Age,” the Harvard Law School professor argued that the U.S. telecommunications industry had become dominated by a few powerful companies, leading to limited competition and negative consequences for consumers, especially for broadband internet. Crawford’s ire was focused particularly ...

FTC v Amgen: The Economics of Bundled Discounts, Part One

The Federal Trade Commission (FTC) recently announced that it would seek to block Amgen’s proposed $27.8 billion acquisition of Horizon Therapeutics. The move was the culmination of several years’ worth of increased scrutiny from both Congress and the FTC into antitrust issues in the biopharmaceutical industry. While the FTC’s move didn’t elicit much public comment, ...

What the European Commission’s More Interventionist Approach to Exclusionary Abuses Could Mean for EU Courts and for U.S. States

The European Commission on March 27 showered the public with a series of documents heralding a new, more interventionist approach to enforce Article 102 of the Treaty on the Functioning of the European Union (TFEU), which prohibits “abuses of dominance.” This new approach threatens more aggressive, less economically sound enforcement of single-firm conduct in Europe. ...

Is Market Concentration Actually Rising?

Everyone is worried about growing concentration in U.S. markets. President Joe Biden’s July 2021 executive order on competition begins with the assertion that “excessive market concentration threatens basic economic liberties, democratic accountability, and the welfare of workers, farmers, small businesses, startups, and consumers.” No word on the threat of concentration to baby puppies, but the ...

Patent Pools, Innovation, and Antitrust Policy

Late last month, 25 former judges and government officials, legal academics and economists who are experts in antitrust and intellectual property law submitted a letter to Assistant Attorney General Jonathan Kanter in support of the U.S. Justice Department’s (DOJ) July 2020 Avanci business-review letter (ABRL) dealing with patent pools. The pro-Avanci letter was offered in ...

How Not to Use Industrial Policy to Promote Europe’s Digital Sovereignty

The concept of European “digital sovereignty” has been promoted in recent years both by high officials of the European Union and by EU national governments. Indeed, France made strengthening sovereignty one of the goals of its recent presidency in the EU Council. The approach taken thus far both by the EU and by national authorities ...

The FTC’s Pre-Acquisition Review Requirement for All Meta Deals: Hyper-Regulatory, Anti-Free Market, Anti-Rule of Law, and Anti-Consumer

The Federal Trade Commission (FTC) wants to review in advance all future acquisitions by Facebook parent Meta Platforms. According to a Sept. 2 Bloomberg report, in connection with its challenge to Meta’s acquisition of fitness-app maker Within Unlimited, the commission “has asked its in-house court to force both Meta and [Meta CEO Mark] Zuckerberg to ...

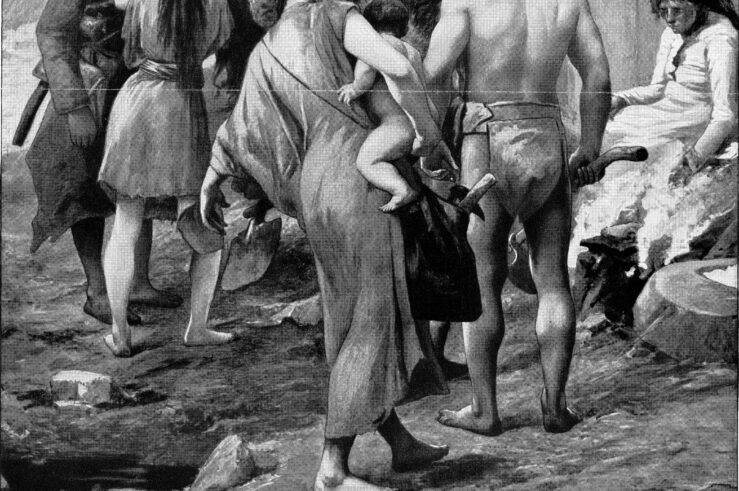

What Antitrust Scholars Can Learn from the Bronze Age Collapse

There is an emerging debate regarding whether complexity theory—which, among other things, draws lessons about uncertainty and non-linearity from the natural sciences—should make inroads into antitrust (see, e.g., Nicolas Petit and Thibault Schrepel, 2022). Of course, one might also say that antitrust is already quite late to the party. Since the 1990s, complexity theory has ...