Showing archive for: “Digital Divide”

How This Supreme Court Term Might Affect the FCC’s Digital-Discrimination Rule

The recently completed U.S. Supreme Court session appears to have upended the administrative state in some pretty fundamental ways. While Loper Bright’s overruling of Chevron attracted the most headlines and hand-wringing, Jarkesy will have far-reaching effects across both the executive and judicial branches. Even seemingly “small” matters such as Ohio v. EPA and Corner Post ...

FCC’s Digital-Discrimination Rules: Bridging the Divide or a Bridge Too Far?

The Federal Communications Commission’s (FCC) recently enacted rules to prevent so-called “digital discrimination” in broadband access are facing a significant legal challenge in the 8th U.S. Circuit Court of Appeals. Earlier this week, the U.S. Justice Department and the FCC submitted their brief on the matter. Now that the parties have made their “opening arguments” ...

Clearing the Telecom Logjam: A Modest Proposal

In this “Age of the Administrative State,” federal agencies have incredible latitude to impose policies without much direction or input from Congress. President Barack Obama fully pulled off the mask in 2014, when he announced “[w]e are not just going to be waiting for legislation,” declaring “I’ve got a pen, and I’ve got a phone.” ...

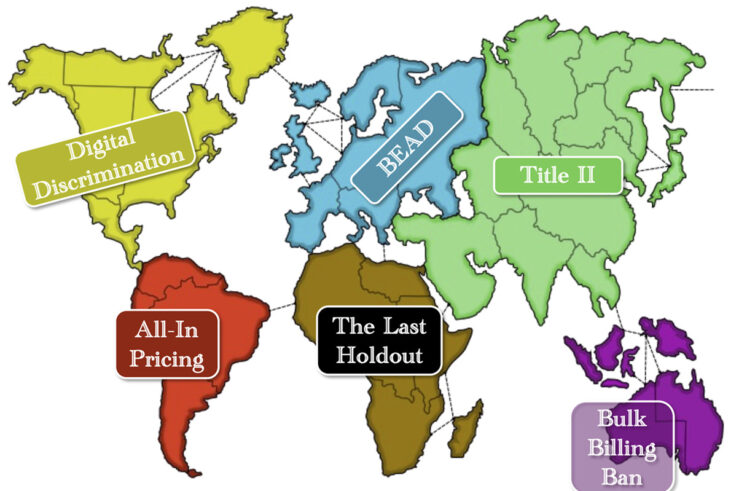

It’s Risk, Jerry, The Game of Broadband Conquest

The big news in telecommunications policy last week wasn’t really news at all—the Federal Communications Commission (FCC) released its proposed rules to classify broadband internet under Title II of the Communications Act. Supporters frame the proposed rules as “net neutrality,” but those provisions—a ban on blocking, throttling, or engaging in paid or affiliated-prioritization arrangements—actually comprise ...

Slouching Toward Disconnection and the End of the ACP

It’s our first post of the New Year, and we’re having a hard time feeling the Hootenanny vibes. Rather than Congress taking a “new year, new you” approach to telecom policy, it seems that D.C. is starting the year with the “same old, same old” of brinkmanship. This time, with broadband subsidies. The Affordable Connectivity ...

Gotta Go Fast: Sonic the Hedgehog Meets the FCC

Federal Communications Commission (FCC) Chair Jessica Rosenworcel this week announced a notice of inquiry (NOI) seeking input on a proposal to raise the minimum connection-speed benchmarks that the commission uses to define “broadband.” The current benchmark speed is 25/3 Mbps. The chair’s proposal would raise the benchmark to 100/20 Mbps, with a goal of having ...

Everyone Discriminates Under the FCC’s Proposed New Rules

The Federal Communications Commission’s (FCC) proposed digital-discrimination rules hit the streets earlier this month and, as we say at Hootenanny Central, they’re a real humdinger. It looks like the National Telecommunications and Information Administration (NTIA) got most of its wish list incorporated into the proposed rules. We’ve got disparate impact and a wide-open door for future ...

Gomez Confirmed to FCC: Here Comes Net Neutrality, But First…

The U.S. Senate moved yesterday in a 55-43 vote to confirm Anna Gomez to the Federal Communications Commission. Her confirmation breaks a partisan deadlock at the agency that has been in place since the beginning of the Biden administration, when Commissioner Jessica Rosenworcel vacated her seat to become FCC chair. The commission now has a ...

ACP Spends More Money While Running Out of Money; BEAD Rules Run Amok

If this is what a summer slowdown looks like in telecom policy world, then autumn is going to be a real humdinger. FCC Announces More Spending for ACP Outreach: Last week, the Federal Communications Commission (FCC) announced government agencies and nonprofits in 11 states and territories will receive an additional $4.3 million to promote the ...

Red Tape and Headaches Plague BEAD Rollout

While the dog days of August have sent many people to the pool to cool off, the Telecom Hootenanny dance floor is heating up. We’ve got hiccups in BEAD deployment, a former Federal Communications Commission (FCC) member urging the agency to free up 12 GHz spectrum for fixed wireless, and another former FCC commissioner urging a ...

Broadband Deployment, Pole Attachments, & the Competition Economics of Rural-Electric Co-ops

In our recent issue brief, Geoffrey Manne, Kristian Stout, and I considered the antitrust economics of state-owned enterprises—specifically the local power companies (LPCs) that are government-owned under the authority of the Tennessee Valley Authority (TVA). While we noted that electricity cooperatives (co-ops) do not receive antitrust immunities and could therefore be subject to antitrust enforcement, we ...

Will the USF Survive the 5th Circuit?

The Telecom Hootenanny is back from a little summer break. As they say on AM radio: “If you miss a little, you miss a lot.” So rather than trying to catch up, let’s focus on some of the latest news from the telecom dancefloor. For this edition of the Hootenanny: we’ve got a big-time challenge ...