Showing archive for: “Advertising”

The View from the United Kingdom: A TOTM Q&A with John Fingleton

What is the UK doing in the field of digital-market regulation, and what do you think it is achieving? There are probably four areas to consider. The first is that the UK’s jurisdiction on mergers increased with Brexit. The UK is not subject to the same turnover threshold as under European law, and this enables ...

The Missing Element in the Google Case

Through laudable competition on the merits, Google achieved a usage share of nearly 90% in “general search services.” About a decade later, the government alleged that Google had maintained its dominant share through exclusionary practices violating Section 2 of the Sherman Antitrust Act. The case was tried in U.S. District Court in Washington, D.C. last ...

The Broken Promises of Europe’s Digital Regulation

If you live in Europe, you may have noticed issues with some familiar online services. From consent forms to reduced functionality and new fees, there is a sense that platforms like Amazon, Google, Meta, and Apple are changing the way they do business. Many of these changes are the result of a new European regulation ...

The View from Turkey: A TOTM Q&A with Kerem Cem Sanli

How did you come to be interested in the regulation of digital markets? I am a full-time professor in competition law at Bilgi University in Istanbul. I first became interested in the application of competition law in digital markets when a PhD student of mine, Cihan Dogan, wrote his PhD thesis on the topic in ...

Shining the Light of Economics on the Google Case

The U.S. Justice Department has presented its evidence in the antitrust case alleging that Google unlawfully maintained a monopoly over “general search services” by “lock[ing] up distribution channels” through “exclusionary agreements” with makers and marketers of devices. Google’s agreements with Apple, for example, have made its search engine the default in Apple’s Safari browser. The ...

FTC’s Amazon Complaint: Perhaps the Greatest Affront to Consumer and Producer Welfare in Antitrust History

“Seldom in the history of U.S. antitrust law has one case had the potential to do so much good [HARM] for so many people.” – Federal Trade Commission (FTC) Bureau of Competition Deputy Director John Newman, quoted in a Sept. 26 press release announcing the FTC’s lawsuit against Amazon (correction IN ALL CAPS is mine) ...

The FTC Tacks Into the Gale, Battening No Hatches: Part 1

The Evolution of FTC Antitrust Enforcement – Highlights of Its Origins and Major Trends 1910-1914 – Creation and Launch The election of 1912, which led to the creation of the Federal Trade Commission (FTC), occurred at the apex of the Progressive Era. Since antebellum times, Grover Cleveland had been the only Democrat elected as president. ...

The FTC’s Gambit Against Amazon: Navigating a Multiverse of Blowback and Consumer Harm

The Federal Trade Commission (FTC) is reportedly poised some time within the next month to file a major antitrust lawsuit against Amazon—the biggest yet against the company and the latest in a long string of cases targeting U.S. tech firms (see, for example, here and here). While specific details of the suit remain largely unknown ...

Norwegian Decision Banning Behavioral Advertising on Facebook and Instagram

The Norwegian Data Protection Authority (DPA) on July 14 imposed a temporary three-month ban on “behavioural advertising” on Facebook and Instagram to users based in Norway. The decision relied on the “urgency procedure” under the General Data Protection Regulation (GDPR), which exceptionally allows direct regulatory interventions by national authorities other than the authority of the country ...

The CJEU’s Decision in Meta’s Competition Case: Consequences for Personalized Advertising Under the GDPR (Part 1)

Today’s judgment from the Court of Justice of the European Union (CJEU) in Meta’s case (Case C-252/21) offers new insights into the complexities surrounding personalized advertising under the EU General Data Protection Regulation (GDPR). In the decision, in which the CJEU gave the green light to an attempt by the German competition authority (FCO) to ...

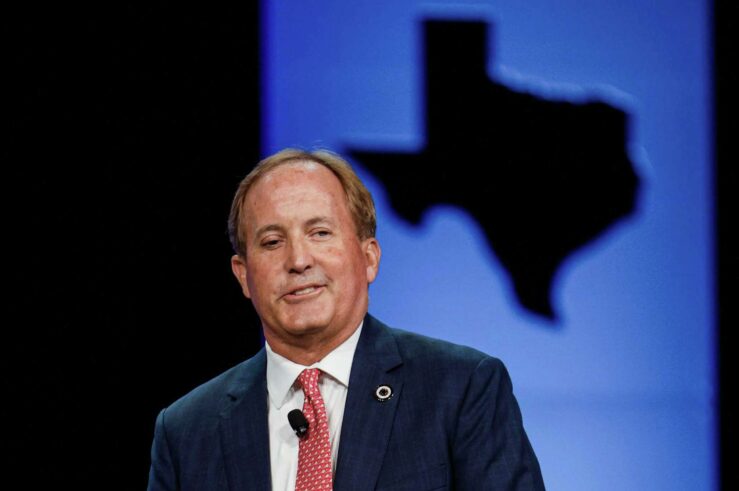

AG Paxton’s Google Suit Makes the Perfect the Enemy of the Good

Having just comfortably secured re-election to a third term, embattled Texas Attorney General Ken Paxton is likely to want to change the subject from investigations of his own conduct to a topic where he feels on much firmer ground: the 16-state lawsuit he currently leads accusing Google of monopolizing a segment of the digital advertising ...

Noah Phillips’ Major Contribution to IP-Antitrust Law: The 1-800 Contacts Case

Recently departed Federal Trade Commission (FTC) Commissioner Noah Phillips has been rightly praised as “a powerful voice during his four-year tenure at the FTC, advocating for rational antitrust enforcement and against populist antitrust that derails the fair yet disruptive process of competition.” The FTC will miss his trenchant analysis and collegiality, now that he has ...