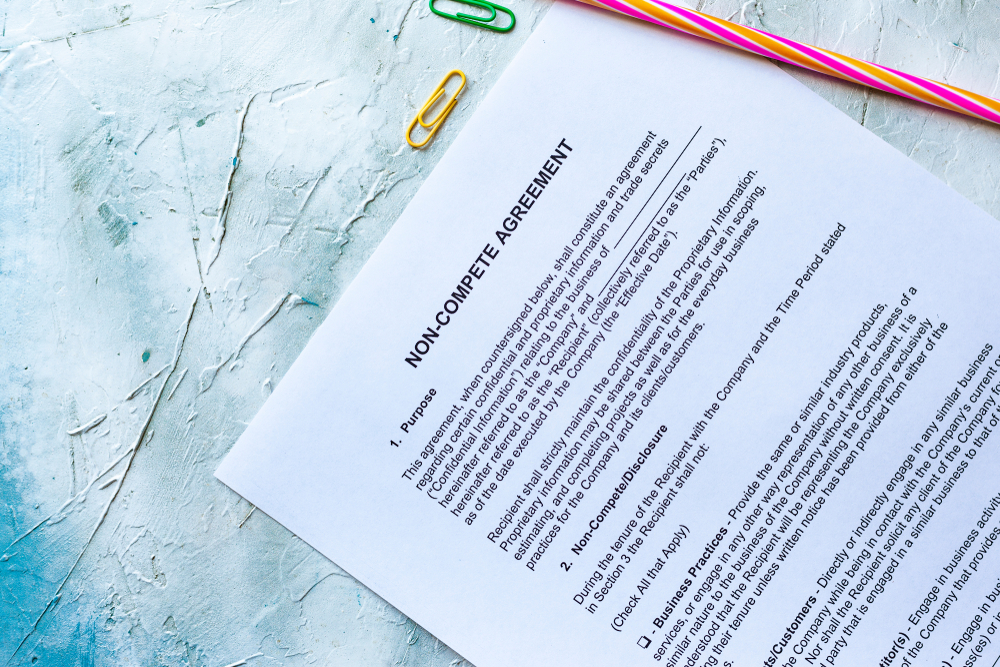

Under a recently proposed rule, the Federal Trade Commission (FTC) would ban the use of noncompete terms in employment agreements nationwide. Noncompetes are contracts in which workers agree not to work for their employer’s competitors for a certain period. The FTC’s rule would be a major policy change, regulating future contracts and retroactively voiding current ones. With limited exceptions, it would cover everyone in the United States.

Scanning academic economists’ public commentary on the ban over the past few weeks (which basically means people on Twitter), I see almost universal support for the FTC’s proposal. You see similar support if you expand to general econ commentary, like Timothy Lee at Full Stack Economics. What pushback there is comes either from people at think tanks (like me) or as hushed skepticism, not the kind of open disagreement you see on most policy issues.

The proposed rule grew out of an executive order by President Joe Biden in 2021, which I wrote about at the time. My argument was that there is a simple economic rationale for the contract: noncompetes encourage both parties to invest in the employee-employer relationship, just like marriage contracts encourage spouses to invest in each other.

Somehow, reposting my newsletter on the economic rationale for noncompetes has turned me into a “pro-noncompete guy” on Twitter.

The discussions have been disorienting. I feel like I’m taking crazy pills! If you asked me, “What new thing should policymakers do to address labor-market power?” I would probably say something about noncompetes! Employers abuse them. The stories of people unable to find a new job because a noncompete binds them are devastating.

Yet, while recognizing the problems with noncompetes, I do not support the complete ban.

That puts me out of step with most vocal economics commentators. Where does this disagreement come from? How do I think about policy generally, and why am I the odd one out?

My Interpretation of the Research

One possibility is that I’m not such a lonely voice, and that the sample of vocal Twitter users is biased toward particular policy views. The University of Chicago Booth School of Business’ Initiative on Global Markets recently polled academic economists about noncompetes and mostly found differing opinions and levels of certainty about the effects of a ban. For example, 43% were uncertain whether a ban would generate a “substantial increase in wages in the affected industries.” Maybe that is partly because the word “substantial” is vague, which is a general problem with these surveys.

Still, more economists surveyed agreed than disagreed. I would answer “disagree” to that statement, as worded.

Why do I differ? One cynical response would be that I don’t know the recent literature and my views are outdated. But from the research I’ve done for a paper I’m writing on labor-market power, I’m fairly well-versed in the noncompete literature. I don’t know it better than the active researchers in the field, but I know it better than the average economist responding to the FTC’s proposal and definitely better than most lawyers. Nor is my disagreement about me being some free-market fanatic. I’m not, and some other free-market types are skeptical of noncompetes. My priors are more complicated (critics might say “confused”) than that, as I will explain below.

After much soul-searching, I’ve concluded that the disagreement is real and results from my—possibly weird—understanding of how we should go from the science of economics to the art of policy. That’s what I want to explain today and get us to think more about.

Let’s start with the literature and the science of economics. First, we need to know “the facts.” The early papers focused heavily on collecting data and facts about noncompetes. We don’t have amazing data on the prevalence of noncompetes, but we know something, which is more than we could say a decade ago. For example, Evan Starr, J.J. Prescott, & Norman Bishara (2021) conducted a large survey in which they found that “18 percent of labor force participants are bound by noncompetes, with 38 percent having agreed to at least one in the past.”[1] We need to know these things, and we should thank the researchers for collecting the data.

With these facts, we can start running regressions. In addition to the paper above, many papers develop indices of noncompete “enforceability” by state, which lets us regress outcomes like wages on an enforceability index. Many papers, like Starr, Prescott, & Bishara above, run cross-state regressions and find that wages are higher in states with higher noncompete enforceability. They also find more training where enforceability is higher. But that kind of correlation is littered with selection issues. High-income workers are more likely to sign noncompetes, so part of the correlation reflects who signs them rather than what the contracts do. That’s not causal. The authors carefully explain this, but sometimes correlations are the best we have, e.g., if we want to study the effect of noncompetes on doctors’ wages and on their poaching of clients.
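To see why that correlation is not causal, here is a toy simulation. It is purely illustrative and not drawn from any of these papers. By construction, signing a noncompete has zero effect on wages: wages depend only on a hypothetical skill level, and only workers above an assumed skill cutoff sign. A naive comparison still finds a large wage premium for signers.

```python
# Toy illustration of selection bias. Noncompetes have NO causal effect on
# wages here, yet signers still earn more, because (by assumption) only
# higher-skill workers sign. All numbers are hypothetical.

def simulate_workers(n=1000):
    workers = []
    for i in range(n):
        skill = i / (n - 1)             # skill spread evenly over [0, 1]
        signs_noncompete = skill > 0.7  # assumption: only high-skill workers sign
        wage = 20.0 + 40.0 * skill      # wage depends on skill only
        workers.append((signs_noncompete, wage))
    return workers


def naive_wage_gap(workers):
    """Mean wage of signers minus mean wage of non-signers."""
    signed = [w for s, w in workers if s]
    unsigned = [w for s, w in workers if not s]
    return sum(signed) / len(signed) - sum(unsigned) / len(unsigned)


gap = naive_wage_gap(simulate_workers())
print(f"Naive wage gap for signers: {gap:.2f}")
```

The gap of roughly 20 is pure selection: the comparison picks up who signs, not what the contract does.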

Some people will simply point to California (which has banned noncompetes for decades) and say, “see, noncompete bans don’t destroy an economy.” Unfortunately, many things make California unique, so while that is evidence, it’s hardly causal.

The most credible results come from recent changes in state policy. These let us run simple difference-in-differences analyses to uncover causal estimates. The results are reasonably transparent and easy to understand.
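For readers who haven’t run one, the basic two-by-two difference-in-differences calculation fits in a few lines. The sketch below uses made-up average log wages for a hypothetical state that bans noncompetes and a comparison state, before and after the ban. The key assumption, parallel trends, is that both states would have moved together without the ban.

```python
def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """2x2 difference-in-differences estimate from four group means.

    The control group's change stands in for what would have happened to the
    treated group without the policy (the parallel-trends assumption).
    """
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


# Hypothetical average log wages; these are NOT numbers from any study.
estimate = diff_in_diff(
    treated_before=3.00,  # banning state, before the ban
    treated_after=3.05,   # banning state, after the ban
    control_before=3.00,  # comparison state, same periods
    control_after=3.02,
)

# A difference in log wages of about 0.03 is roughly a 3% wage effect.
print(f"Estimated effect on log wages: {estimate:.3f}")
```

Anything that hits both states equally, like a nationwide recession, cancels out in the subtraction; anything that hits them differently does not.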

Michael Lipsitz & Evan Starr (2021) (are you starting to recognize that Starr name?) study a 2008 Oregon ban on noncompetes for hourly workers. They find the ban increased hourly wages overall by 2 to 3%, which implies that those signing noncompetes may have seen wages rise as much as 14 to 21%. This 3% number is what the FTC assumes will apply to the whole economy when they estimate a $300 billion increase in wages per year under their ban. It’s a linear extrapolation.
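The jump from a 2 to 3% average effect to a 14 to 21% effect for signers is the kind of back-of-the-envelope scaling you get by dividing the average effect by the share of workers who are actually bound. The sketch below assumes, for illustration only, that about one in seven hourly workers is bound and that the whole wage gain goes to them. That share is an assumption chosen because it reproduces the quoted range, not a figure taken from Lipsitz & Starr.

```python
def effect_on_signers(average_effect_pct, share_bound):
    """Scale an average effect up to the workers actually bound.

    Assumes the entire average effect comes from bound workers
    (no spillovers to workers without noncompetes).
    """
    return average_effect_pct / share_bound


SHARE_BOUND = 1 / 7  # hypothetical: roughly 14% of hourly workers bound

low = effect_on_signers(2.0, SHARE_BOUND)
high = effect_on_signers(3.0, SHARE_BOUND)
print(f"Implied effect on signers: {low:.0f}% to {high:.0f}%")
```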

Similarly, in 2015, Hawaii banned noncompetes for new hires in tech industries. Natarajan Balasubramanian et al. (2022) find that the ban increased new-hire wages by 4%. They also estimate that the ban increased worker mobility by 11%. Labor economists generally think of worker turnover as a good thing. Here, though, it is tricky, because the whole point of the agreement is to reduce turnover and encourage a better relationship between workers and firms.

The FTC also points to three studies that find that banning noncompetes increases innovation, according to a few different measures. I won’t say anything about these because you can infer my reaction based on what I will say below on wage studies. If anything, I’m more skeptical of innovation studies, simply because I don’t think we have a good understanding of what causes innovation generally, let alone how to measure the impact of noncompetes on innovation. You can read what the FTC cites on innovation and make up your own mind.

From Academic Research to an FTC Ban

Now that we understand some of the papers, how do we move to policy?

Let’s assume I read the evidence basically as the FTC does. I don’t, and I will explain why in a future paper, but that’s not the debate for this post. How do we think about the optimal policy response, given the evidence?

There are two main reasons I am not ready to extrapolate from the research to the proposed ban. Every economist knows them: the dreaded pests of external validity and general equilibrium effects.

Let’s consider external validity through the Oregon ban paper and the Hawaii tech ban paper. Again, these are not critiques of the papers, but of how the FTC wants to move from them to a national ban.

Notice above that I said the Oregon ban went into effect in 2008, which means it happened as the whole country was entering a major recession and financial crisis. The authors do their best to deal with differential responses to the recession, but every state in their data went through a recession. Did the recession matter for the results? It seems plausible to me.

Another important detail about the Oregon ban is that it applied only to hourly workers, while the FTC rule would apply to all workers. You can’t confidently assume hourly workers are just like salaried workers. Hourly workers who sign noncompetes are less likely than salaried workers to read them, consult with their family about them, or negotiate over them. If part of the problem with noncompetes is that people don’t understand them until it is too late, looking only at hourly workers, who understand noncompetes even less than salaried workers, will overstate the harm. Also, Lipsitz & Starr recognize that under a partial ban, spillovers matter and firms respond in different ways, such as converting hourly workers to salaried status to keep the noncompete. That option won’t exist under a national ban. It’s not the same experiment at a national scale. Which way will the effect change? How confident are we?

The effects of a national FTC ban are unlikely to match those of the Hawaii ban. First of all, Hawaii is weird. It has a small population, and tech is a small part of the state’s economy. The ban even excluded telecom from the tech sector. We are talking about a targeted ban. What does the Hawaii experiment tell us about a ban on noncompetes for tech workers in a non-island location like Boston? What does it tell us about a national ban on all noncompetes, like the one the FTC is proposing? Maybe these differences do not matter. To further complicate things, the policy change also banned nonsolicitation clauses. Maybe that part was unimportant. But I’d want more research and more policy experimentation to tease out these details.

As you dig into these papers, you find more and more of these issues. That’s not a knock on the papers but an inherent difficulty in moving from research to policy. It’s further compounded by the fact that this empirical literature is still relatively new.

What will happen when we scale these bans up to the national level? That’s a huge question for any policy change, especially one as large as a national ban. The FTC seems confident about what will happen, but moving from micro to macro is not trivial. Macroeconomists are starting to get serious about how micro effects add up to macro outcomes, but it takes work.

I want to know more. Which effects are amplified when scaled? Which effects drop off? What’s the full National Income and Product Accounts (NIPA) accounting? I don’t know. No one does, because we don’t have that sort of price-theoretic, general-equilibrium research. Firms will adjust on many margins, and there’s always another margin we are not capturing. Instead, the FTC did a simple linear extrapolation from the state studies to a national ban: studies find a 3% wage effect here, so multiply that by the number of workers.
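A linear extrapolation of this kind amounts to a single multiplication. In the sketch below, the annual wage bill of covered workers is a round hypothetical figure, not the FTC’s actual input, and the 1% and 2% rows are hypothetical alternatives included to show how the headline number moves one-for-one with the assumed effect. Nothing in it captures the margins firms adjust on; that omission is exactly the limitation.

```python
def linear_wage_gain(wage_effect, covered_wage_bill):
    """Extrapolate a percentage wage effect to the whole economy.

    Linear by construction: the effect is assumed to be the same at national
    scale as in the state studies, with no general-equilibrium adjustment.
    """
    return wage_effect * covered_wage_bill


COVERED_WAGE_BILL = 10e12  # hypothetical: $10 trillion in annual wages

for effect in (0.01, 0.02, 0.03):
    gain = linear_wage_gain(effect, COVERED_WAGE_BILL)
    print(f"{effect:.0%} effect -> ${gain / 1e9:,.0f} billion per year")
```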

When we are doing policy work, we would also like some sort of welfare analysis. It’s not just about measuring workers in isolation. We need a way to think about the costs and benefits and how to trade them off. All the diff-in-diff regressions in the world won’t get at it; we need a model.

Luckily, we have one paper that blends empirics and theory to do welfare analysis.[2] Liyan Shi has a paper forthcoming in Econometrica (which is no joke to publish in) titled “Optimal Regulation of Noncompete Contracts.” In it, she studies a model meant to capture the tradeoff between encouraging a firm’s investment in workers and reducing labor mobility. To bring the theory to data, she scrapes data on U.S. public firms from Securities and Exchange Commission filings and merges them with firm-level data from Compustat, plus some other sources, to get measures of firm investment in intangibles. When she calibrates her model to the data, she finds that the optimal policy is roughly a ban on noncompetes.

It’s an impressive paper. Again, I’m unsure how far we can extrapolate from it to a ban covering all workers. First, as I’ve written before, we know publicly traded firms are different from private firms, and that difference has changed over time. Second, it’s plausible that CEOs are different from other workers, and the relationship between CEO noncompetes and firm-level intangible investment isn’t identical to the relationship between a mid-level engineer’s noncompete and the firm’s investment in that engineer.

Beyond the particular issues of generalizing Shi’s paper, the larger concern is that this is the only paper that does a welfare analysis. That’s troubling to me as a basis for a major policy change.

I think an analogy to taxation is helpful here. I’ve published a few papers about optimal taxation, so it’s an area I’ve thought more about. Within optimal taxation, you see this type of paper a lot. Here’s a formal model that captures something that theorists find interesting. Here’s a simple approach that takes the model to the data.

My favorite optimal-taxation papers take this approach. Take this paper that I absolutely love, “Optimal Taxation with Endogenous Insurance Markets” by Mikhail Golosov & Aleh Tsyvinski.[3] It is not a price-theory paper; it is a Theory paper, with a capital T. I’m talking lemmas-and-theorems type of stuff: a bunch of QEDs, and then they calibrate their model to U.S. data.

How seriously should we take their quantitative exercise? After all, it was in the Quarterly Journal of Economics and my professors were assigning it, so it must be an important paper. But people who know this literature will quickly recognize that it’s not the quantitative result that makes that paper worthy of the QJE.

I was very confused by this early in my career. If we find the best paper, why not take the result completely seriously? My first publication, which was in the Journal of Economic Methodology, grew out of my confusion about how economists were evaluating optimal tax models. Why did professors think some models were good? How were the authors justifying that their paper was good? Sometimes papers are good because they closely match the data. Sometimes papers are good because they quantify an interesting normative issue. Sometimes papers are good because they expose an interesting means-ends analysis. Most of the time, papers do all three blended together, and it’s up to the reader to be sufficiently steeped in the literature to understand what the paper is really doing. Maybe I read the Shi paper wrong, but I read it mostly as a theory paper.

One difference between the optimal-taxation literature and the optimal-noncompete policy world is that the Golosov & Tsyvinski paper is situated within a century of formal optimal-taxation models. The knowledgeable scholar of public economics can compare and contrast. The paper has a lot of value because it does one particular thing differently from everything else in the literature.

Or think about patent policy, which is what I compared noncompetes to in my original post. There is a tradeoff between encouraging innovation and restricting monopoly, and quantifying it takes a model and data. Rafael Guthmann & David Rahman have a new paper on the optimal length of patents that Rafael summarized at Rafael’s Commentary. The basic structure is very similar to the Shi and Golosov & Tsyvinski papers: interesting models supplemented with a calibration exercise to put a number on the optimal policy. Guthmann & Rahman find four to eight years, instead of the current system’s 20 years.

Is that true? I don’t know. I certainly wouldn’t want the FTC to unilaterally put the number at four years because of the paper. But I am glad for their contribution to the literature and our understanding of the tradeoffs, and that I can position that number within a literature asking similar questions.

I’m sorry to all the people doing great research on noncompetes, but by my reading, we are just not there yet. For studying optimal noncompete policy in a model, we have one paper. It was groundbreaking to tie this theory to novel data, but it is still only one welfare analysis.

My Priors: What’s Holding Me Back from the Revolution

In a world where you start without any thoughts about which direction is optimal (a uniform prior) and you observe one paper that says bans are net positive, you should think that bans are net positive. Some information is better than none and now you have some information. Make a choice.

But that’s not the world we live in. We all come to a policy question with prior beliefs that affect how much we update our beliefs.
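The difference between those two worlds can be sketched with the standard normal-normal updating formula, a textbook model swapped in here for illustration. Treat the unknown as the net benefit of a ban; all numbers below are hypothetical. One noisy study reporting a positive net benefit moves a nearly uniform (diffuse) prior almost all the way to the study’s estimate, while a skeptical, more confident prior moves toward the evidence but need not change sign.

```python
def posterior_mean(prior_mean, prior_var, signal, signal_var):
    """Normal prior + normal signal: precision-weighted average of the two."""
    prior_precision = 1.0 / prior_var
    signal_precision = 1.0 / signal_var
    return (prior_mean * prior_precision + signal * signal_precision) / (
        prior_precision + signal_precision
    )


# Hypothetical: one paper's estimate of the ban's net benefit is +1, with noise.
signal, signal_var = 1.0, 1.0

diffuse = posterior_mean(0.0, 1e6, signal, signal_var)      # nearly uniform prior
skeptical = posterior_mean(-0.5, 0.25, signal, signal_var)  # informative prior

print(f"diffuse prior   -> {diffuse:.3f}")
print(f"skeptical prior -> {skeptical:.3f}")
```

The skeptical prior still updates, from -0.5 to -0.2, but one signal is not enough to flip it.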

I have three slightly weird priors that, I will argue, you should share, but that currently place me out of step with most economists.

First, I place more weight on theoretical arguments than most. No one sits back and just absorbs the data without using theory; that’s impossible. All data requires theory. Still, I think it is meaningful to say some people place more weight on theory. I’m one of those people.

To be clear, I also care deeply about data. But I write theory papers and a theory-heavy newsletter. And I think these theories matter for how we think about data. The theoretical justification for noncompetes has been around for a long time, as I discussed in my original post, so I won’t say more.

The second way that I differ from most economists is even weirder. I place weight on the benefits of existing agreements or institutions. The longer they have been in place, the more weight I place on the benefits. Josh Hendrickson and I have a paper with Alex Salter that basically formalizes George Stigler’s argument that “every long-lasting institution is efficient.” When there are feedback mechanisms, such as with markets or democracy, the resulting institutions are the product of an evolutionary process that slowly selects more and more gains from trade. If the institutions were so bad, people would eventually get rid of them. That’s not a free-market bias, since it also means that I think something like the Medicare system is likely an efficient form of social insurance and intertemporal bargaining for people in the United States.

Back to noncompetes: many companies use them in many different contexts, and many workers sign them. My prior is that they do so because a noncompete is a mutually beneficial contract that allows them to make trades in a world with transaction costs. As I explained in a recent post, Yoram Barzel taught us that, in a world with transaction costs, people will “erect social institutions to impose and enforce the restraints.”

One possible rebuttal is that noncompetes, while they have existed for a long time, have only become common in the past few decades. That is not very long-lasting, so the FTC ban is a natural policy response to a new challenge and to the discovery that these contracts are actually bad. That response would persuade me more if the policy were the product of a democratic bargain rather than an ideological agenda pushed by the chair of the FTC, which I think is closer to reality. That is Earl Thompson and Charlie Hickson’s spin on Stigler’s efficient-institutions point: ideology gets in the way.

Finally, relative to most economists, I place more weight on experimentation and feedback mechanisms. Most economists still think of the world through the lens of a benevolent planner doing a cost-benefit analysis. I do that sometimes, too, but I also think we need to take our own informational limitations seriously. That’s why we talk about limited information all the time on my newsletter. Again, if we started completely agnostic, this wouldn’t point one way or another: we would recognize that we don’t know much, and even a slight signal would push us in its direction. But paired with my previous point about evolution, it makes me hesitant about a national ban.

I don’t think the science is settled on lots of things that people want to tell us the science is settled on. For example, I’m not convinced we know markups are rising. I’m not convinced market concentration has skyrocketed, as others want to claim.

It’s not a free-market bias, either. I’m not convinced the Jones Act is bad. I’m not convinced it’s good, but Josh has convinced me that the question is complicated.

Because I’m not ready to easily say the science is settled, I want to know how we will learn if we are wrong. In a prior Truth on the Market post about the FTC rule, I quoted Thomas Sowell’s Knowledge and Decisions:

In a world where people are preoccupied with arguing about what decision should be made on a sweeping range of issues, this book argues that the most fundamental question is not what decision to make but who is to make it—through what processes and under what incentives and constraints, and with what feedback mechanisms to correct the decision if it proves to be wrong.

A national ban bypasses this and severely cuts off our ability to learn if we are wrong. That worries me.

Maybe this all means that I am too conservative and need to be more open to changing my mind. Maybe I’m inconsistent in how I apply these ideas. After all, “there’s always another margin” also means that the harm of a policy will be smaller than anticipated since people will adjust to avoid the policy. I buy that. There are a lot more questions to sort through on this topic.

Unfortunately, the discussion around noncompetes has been short-circuited by the FTC. Hopefully, this post gave you tools to think about a variety of policies going forward.

[1] The U.S. Bureau of Labor Statistics now collects data on noncompetes. Since 2017, we’ve had one question on noncompetes in the National Longitudinal Survey of Youth 1997. Donna S. Rothstein and Evan Starr (2021) also find that noncompetes cover around 18% of workers. It is very plausible this is an understatement, since noncompetes are complex legal documents, and workers may not understand that they have one.

[2] Other papers combine theory and empirics. Kurt Lavetti, Carol Simon, & William D. White (2023) build a model to derive testable implications about holdup problems. Using data on doctors, they find that noncompetes raise returns to tenure and lower turnover.

[3] It’s not exactly the same. The Golosov & Tsyvinski paper doesn’t even take the calibration seriously enough to include the details in the published version. Shi’s paper is a more serious quantitative exercise.